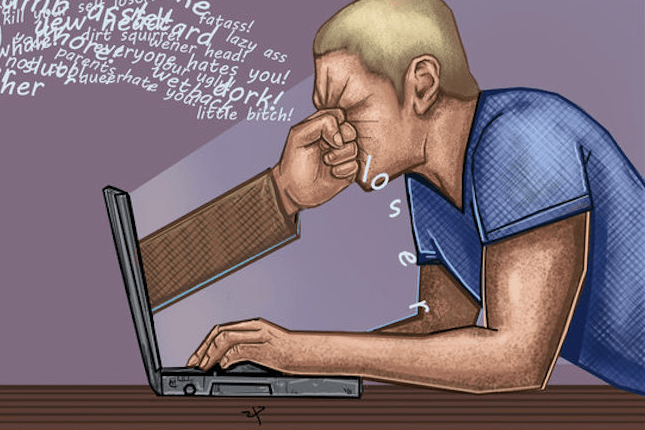

The big social networks and video games have failed to prioritize user well-being over their own growth. As a result, society is losing the battle against bullying, predators, hate speech, misinformation and scammers. Typically, when a whole class of tech companies has a dire problem it can’t cost-effectively solve on its own, a software-as-a-service business emerges to fill the gap, as happened with web hosting, payment processing and more. So along comes AntiToxin Technologies, a new Israeli startup that wants to help web giants fix their abuse troubles with its safety-as-a-service.

It started on Minecraft. AntiToxin co-founder Ron Porat is a cybersecurity expert who had started the popular ad blocker Shine. Yet right under his nose, one of his kids was being mercilessly bullied on the hit children’s game. If even the most internet-savvy parents were being caught off guard by online abuse, Porat realized the problem was bigger than victims could handle by trying to protect themselves. The platforms had to do more, research confirmed.

A recent Ofcom study found that almost 80% of kids had a potentially harmful online experience in the past year. Indeed, 23% said they’d been cyberbullied, and 28% of 12- to 15-year-olds said they’d received unwelcome friend or follow requests from strangers. A Ditch The Label study found that of 12- to 20-year-olds who’d been bullied online, 42% had been bullied on Instagram.

Unfortunately, the massive scale of the threat combined with a late start on policing by top apps makes progress tough without huge spending. Facebook tripled the headcount of its content moderation and security team, taking a noticeable hit to its profits, yet toxicity persists. Other mainstays like YouTube and Twitter have yet to make concrete commitments to safety spending or staffing, and the result is a non-stop stream of scandals involving child exploitation and targeted harassment. Smaller companies like Snap or Fortnite-maker Epic Games may not have the money to build sufficient safeguards in-house.

“The tech giants have proven time and time again we can’t rely on them. They’ve abdicated their responsibility. People need to realize this problem won’t be solved by these companies,” says AntiToxin CEO Zohar Levkovitz, who previously sold his mobile ad company Amobee to Singtel for $321 million. “You need new players, new thinking, new technology. A company where ‘Safety’ is the product, not an afterthought. And that’s where we come in.” The startup recently raised a multimillion-dollar seed round from Mangrove Capital Partners and is reportedly prepping for a double-digit-million Series A.

AntiToxin’s technology plugs into the backends of apps with social communities whose users either broadcast to or message each other and are thereby exposed to abuse. AntiToxin’s systems privately and securely crunch all the available signals regarding user behavior and policy violation reports, from text to videos to blocking. It can then flag a wide range of toxic actions and let the client decide whether to delete the activity, suspend the user responsible or proceed some other way based on its terms and local laws.
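That division of labor, where the vendor flags and the client platform decides, can be illustrated with a small sketch. AntiToxin hasn’t published its interface, so everything below is an assumption: the names `Flag`, `ClientPolicy` and `Action`, the categories, and the idea of a per-category confidence threshold are all hypothetical, meant only to show how a platform might map vendor flags to its own actions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    """Hypothetical responses a client platform might choose."""
    NONE = "none"
    DELETE_CONTENT = "delete_content"
    SUSPEND_USER = "suspend_user"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Flag:
    """A hypothetical flag a safety vendor emits for one piece of activity."""
    user_id: str
    category: str      # e.g. "harassment", "grooming" (illustrative labels)
    confidence: float  # 0.0 to 1.0, as scored by the vendor's models


@dataclass
class ClientPolicy:
    """Per-platform rules: the client, not the vendor, maps flags to actions."""
    # category -> list of (minimum confidence, action)
    rules: dict[str, list[tuple[float, Action]]] = field(default_factory=dict)
    default: Action = Action.NONE

    def decide(self, flag: Flag) -> Action:
        # Check the strictest threshold first so high-confidence flags
        # get the strongest response the platform has configured.
        thresholds = sorted(self.rules.get(flag.category, []),
                            key=lambda rule: rule[0], reverse=True)
        for min_conf, action in thresholds:
            if flag.confidence >= min_conf:
                return action
        return self.default


policy = ClientPolicy(rules={
    "grooming": [(0.9, Action.SUSPEND_USER), (0.5, Action.HUMAN_REVIEW)],
    "harassment": [(0.8, Action.DELETE_CONTENT)],
})
print(policy.decide(Flag("u1", "grooming", 0.95)))  # Action.SUSPEND_USER
print(policy.decide(Flag("u2", "grooming", 0.6)))   # Action.HUMAN_REVIEW
```

Keeping the thresholds on the client side mirrors the article’s point that each platform applies its own terms and local laws to the same underlying signals.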

Using artificial intelligence, including natural language processing, machine learning and computer vision, AntiToxin can identify the intent behind behavior to determine whether it’s malicious. For example, the company tells me it can distinguish between a married couple consensually exchanging nude photos on a messaging app and an adult sending harmful imagery to a child. It can also determine whether two teens are swearing at each other playfully as they compete in a video game or one is verbally harassing the other. The company says that beats using static dictionary blacklists of forbidden words.
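The weakness of a static dictionary blacklist is easy to demonstrate. The sketch below is not AntiToxin’s method (which isn’t public); it is a hypothetical word-list filter, with an invented three-word `BLACKLIST` and two invented messages, showing that a blacklist flags friendly banter while missing a threatening message that contains no listed word.

```python
import re

# Hypothetical static blacklist; real lists are far larger.
BLACKLIST = {"noob", "loser", "trash"}

# Lowercase words, keeping apostrophes inside contractions.
WORD_RE = re.compile(r"[a-z']+")


def blacklist_flag(message: str) -> bool:
    """Return True if any word in the message appears on the static blacklist."""
    words = WORD_RE.findall(message.lower())
    return any(word in BLACKLIST for word in words)


# Two friends trash-talking during a match...
banter = "gg you absolute noob, rematch?"
# ...versus a hostile message that uses no listed word.
hostile = "you know what you did. nobody wants you here."

print(blacklist_flag(banter))   # True: flagged, though it is playful
print(blacklist_flag(hostile))  # False: missed, though it is abusive
```

The filter sees only words, not who is speaking to whom or in what context, which is the gap intent-based detection aims to close.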

AntiToxin is under NDA, so it can’t reveal its client list, but it claims recent media attention and looming regulations regarding online abuse have ramped up inbound interest. Eventually, the company hopes to build better predictive software to identify users who’ve shown signs of increasingly worrisome behavior so their activity can be more closely moderated before they lash out. It’s also trying to build a “safety graph” that will help it identify bad actors across services so they can be broadly deplatformed, similar to the way Facebook uses data on Instagram abuse to police linked WhatsApp accounts.
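The article doesn’t say how a “safety graph” would be built. One plausible data structure for the idea is a union-find (disjoint-set) index over accounts, sketched below under loud assumptions: the `SafetyGraph` class, the `(service, account_id)` node format, and the service and account names are all hypothetical, and which shared signals justify linking two accounts is left to whoever operates it.

```python
class SafetyGraph:
    """Toy cross-service account graph built on union-find (disjoint sets).

    Nodes are hypothetical (service, account_id) pairs. Linking two nodes
    records that some shared signal suggests one person controls both.
    """

    def __init__(self):
        self._parent = {}

    def _find(self, node):
        # Unseen nodes start as their own root; path halving keeps trees shallow.
        self._parent.setdefault(node, node)
        while self._parent[node] != node:
            self._parent[node] = self._parent[self._parent[node]]
            node = self._parent[node]
        return node

    def link(self, a, b):
        """Record that accounts a and b appear to share an owner."""
        root_a, root_b = self._find(a), self._find(b)
        if root_a != root_b:
            self._parent[root_a] = root_b

    def linked_accounts(self, node):
        """Return every known account in the same cluster as node."""
        root = self._find(node)
        return {n for n in list(self._parent) if self._find(n) == root}


graph = SafetyGraph()
graph.link(("instagram", "ig_123"), ("whatsapp", "wa_555"))
graph.link(("whatsapp", "wa_555"), ("game_x", "gx_9"))
graph.link(("instagram", "ig_777"), ("whatsapp", "wa_888"))

# Flagging one account surfaces its linked accounts on other services.
print(sorted(graph.linked_accounts(("instagram", "ig_123"))))
# [('game_x', 'gx_9'), ('instagram', 'ig_123'), ('whatsapp', 'wa_555')]
```

Union-find keeps each link nearly constant-time, but a production system would also need ways to weigh evidence and undo mistaken links, which this sketch omits.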

“We’re approaching this very human problem like a cybersecurity company; that is, everything is a Zero-Day for us,” says Levkovitz, discussing how AntiToxin indexes new patterns of abuse that it can then search for across its clients. “We’ve got intelligence unit alums, PhDs and data scientists creating anti-toxicity detection algorithms that the world is yearning for.” AntiToxin is already having an impact. TechCrunch commissioned it to investigate a tip about child sexual imagery on Microsoft’s Bing search engine. We discovered that Bing was actually recommending child abuse image results to people who’d conducted innocent searches, leading Bing to make changes to clean up its act.

AntiToxin identified publicly listed WhatsApp Groups where child sexual abuse imagery was exchanged

One major threat to AntiToxin’s business is something often seen as boosting online safety: end-to-end encryption. AntiToxin claims that when companies like Facebook expand encryption, they’re purposefully hiding problematic content from themselves so they don’t have to police it.

Facebook claims it can still use metadata about connections on its already encrypted WhatsApp network to suspend those who violate its policy. But AntiToxin provided research to TechCrunch for an investigation that found child sexual abuse imagery sharing groups were openly accessible and discoverable on WhatsApp, in part because encryption made them hard for WhatsApp’s automated systems to track down.

AntiToxin believes abuse would proliferate if encryption becomes a much broader trend, and it claims the harm that would cause outweighs fears about companies or governments surveilling unencrypted transmissions. It’s a tough call. Political dissidents, whistleblowers and perhaps the whole concept of civil liberty rely on encryption. But parents may see sex offenders and bullies as a more dire problem, one exacerbated by platforms having no idea what people are saying inside chat threads.

What seems clear is that the status quo has got to go. Shaming, exclusion, sexism, grooming, impersonation and threats of violence have started to feel normal. A culture of cruelty breeds more cruelty. Tech’s success stories are being marred by horror stories from their users. Paying for new weapons in the fight against toxicity seems like a reasonable investment to ask for.
